NEW CASE: secret algorithm targets disabled people unfairly for benefit probes – cutting off life-saving cash and trapping them in call centre hell

Foxglove is supporting the Greater Manchester Coalition of Disabled People to bring a legal challenge against disabled people being forced into gruelling and invasive benefit fraud investigations on the say-so of a secret algorithm in the Department for Work and Pensions (DWP).

The coalition and Foxglove have begun a CrowdJustice campaign to raise £5,000 to make this case possible. If you’re able to chip in, please hit the link below to contribute.

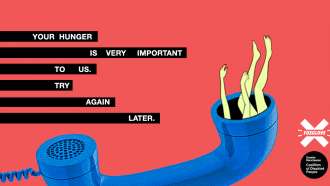

Disabled people have described how they are living in “fear of the brown envelope” hitting their doormat, alerting them that they have been pinpointed by the algorithm.

Others just receive a phone call, with no explanation of why they have been flagged.

That kicks into action an automated system that forces targeted people to repeatedly explain why they need payments in an aggressive and humiliating process that can last up to a year.

Even worse, these investigations often result in people having their benefits cut off, taking away cash needed for essentials like food, rent and energy.

‘Huge percentage’ targeted

Anecdotal evidence collected by the coalition says a “huge percentage” of the group has been hit by the DWP system.

Testimony reveals people are forced to make long and frustrating calls to call centres, dealing with confusing phone menus and unhelpful operators with no training to assist disabled and vulnerable people.

Others must fill in forms more than 80 pages long that ask the same questions again and again, seemingly to provoke people with memory or cognitive issues into making mistakes that can be used as an excuse to drag out benefit reassessment.

The coalition has sent a legal letter, supported by Foxglove, demanding the government explain how the algorithm works and what, if anything, it has done to eliminate bias.

This week, Labour MP Debbie Abrahams, as a member of the Work and Pensions select committee, quizzed top DWP officials about the algorithm. They were unable to say what proportion of the people being investigated for benefit fraud are disabled, saying: “it will depend on the propensity of incorrect information that’s been provided to us.”

The coalition’s letter follows investigations by charity Privacy International, which exposed how the DWP has claimed it is using “cutting-edge artificial intelligence to crack down on organised criminal gangs committing large-scale benefit fraud.”

DWP refuses to answer questions on how people are flagged

Yet the DWP has refused to answer questions from Privacy International and others about how people are flagged, and whether those people are disproportionately disabled, women, or black, Asian or minority ethnic people.

The law says that important decision systems like this must be transparent so people have the opportunity to find out whether they are being treated unfairly.

The coalition is also concerned that the benefit decisions made by the DWP’s algorithm may involve claimants’ data being handed over to external organisations without the claimants being told – which may break privacy law.

UN: algorithms ‘highly likely’ to repeat biases in existing data

In 2019, a UN report into the UK’s “digital welfare state” said algorithms are “highly likely” to repeat biases reflected in existing data and make them even worse.

It added that: “in-built forms of discrimination can fatally undermine the right to social protection for key groups and individuals.”

The report called for a “concerted effort to identify and counteract such biases in designing the digital welfare state.”

If the government won’t come clean – or if the algorithm breaks the law – we will begin legal action to take it to court and get answers.

We are working with Will Perry and Conor McCarthy from Monckton Chambers on this case.

As always, if you’d like to hear more about this case, join our mailing list here – we’ll be in touch.